If you're responsible for developing an app or digital product, you'll know how important it is to test your app for bugs each time you release it. You'll also know how expensive and time-consuming this process can be.

Quality control can consume up to 40% of revenue, according to IBM (though our data shows it's typically 15-20% for mobile apps). That got me wondering: could AI agents make testing both more efficient and more enjoyable for our testing team?

While I haven't found a fully working solution yet, I've been experimenting with some seemingly viable approaches that could transform how we test mobile apps.

Understanding Agentic AI Testing

Gartner predicts that Agentic AI will be a very hot topic in 2025. But what makes it different from what we're using now?

If you've used ChatGPT, you're familiar with its request-and-response pattern. Agentic AI works differently - it can operate autonomously toward a goal without constant human guidance. Think of it as an independent tester that can:

- Figure out different ways to complete tasks

- Adapt when it encounters problems

- Make decisions about what to test next

- Work without detailed step-by-step instructions

This is quite different from traditional automation because the agent isn't following a rigid script. For example, if it encounters an error, it might try restarting the app or finding an alternative path to complete the same task.
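To make that recovery behaviour concrete, here's a minimal Python sketch. Everything in it is hypothetical: `FakeApp`, its screen names and `run_agent` are invented for illustration, and the candidate paths are a fixed list, whereas a real agent would ask a model to propose them.

```python
from dataclasses import dataclass, field


@dataclass
class FakeApp:
    """Hypothetical stand-in for an app under test (not a real device API)."""
    screen: str = "home"
    crashed: bool = False
    log: list = field(default_factory=list)

    def tap(self, target: str) -> bool:
        """Tap a named control; return True if the tap had the intended effect."""
        self.log.append(f"tap:{target}")
        if self.crashed:
            return False
        if target == "quick_add":
            # Simulate a broken shortcut that crashes the app.
            self.crashed = True
            return False
        if target == "diary" and self.screen == "home":
            self.screen = "diary"
            return True
        if target == "add_item" and self.screen == "diary":
            self.screen = "item_added"
            return True
        return False

    def restart(self) -> None:
        """Relaunch the app and return to the home screen."""
        self.log.append("restart")
        self.crashed = False
        self.screen = "home"


def run_agent(app, goal_screen, candidate_paths, max_restarts=2):
    """Try each candidate path in turn, restarting the app after a failure."""
    restarts = 0
    for path in candidate_paths:
        succeeded = all(app.tap(step) for step in path)
        if succeeded and app.screen == goal_screen:
            return True
        if restarts < max_restarts:
            app.restart()
            restarts += 1
    return False


app = FakeApp()
reached = run_agent(app, "item_added", [["quick_add"], ["diary", "add_item"]])
print(reached, app.log)
```

The point of the sketch is the shape of the loop: a failed path triggers a restart and a different attempt rather than a failed test.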

We currently use Sofy for our UI automation testing, and I'm hoping they're looking at this stuff.

The Industry Is Moving Towards AI Testing

Major UK banks are already pushing the boundaries of testing automation. Take Lloyds Banking Group - they've adopted an 'Automation first' philosophy, with dedicated Software Development Engineers in Test (SDET) using frameworks like WDIO, Selenium, Cucumber, and Appium. But even these sophisticated tools require significant human oversight and maintenance. AI agents could take this automation to the next level, reducing the manual workload on their testing teams while improving coverage.

Why Goal-Driven Testing Matters

Traditional testing requires writing detailed test cases with every single step spelled out. Even with automation tools like Selenium, someone needs to code all these steps. With a goal-driven agent, you simply tell it what the user should achieve, and it figures out how to get there. As you can imagine, this could massively reduce the work involved in test creation and maintenance.
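The difference shows up in how a test is written down. The sketch below contrasts the two styles in Python; the step names, the `GoalTest` class and the `diary_items` state key are all assumptions made up for this example, not part of any real framework.

```python
from dataclasses import dataclass
from typing import Callable

# Scripted style: every step is spelled out and tied to specific controls,
# so a renamed button or a new screen breaks the test.
SCRIPTED_TEST = [
    ("tap", "diary_tab"),
    ("tap", "add_breakfast"),
    ("type", "tea with milk"),
    ("tap", "save"),
]


@dataclass(frozen=True)
class GoalTest:
    """Goal-driven style: state the outcome and let the agent find the steps."""
    goal: str
    is_done: Callable[[dict], bool]  # checks the (hypothetical) app state
    max_steps: int = 30              # safety limit on agent actions


breakfast = GoalTest(
    goal="Log a breakfast of tea with milk and a small yoghurt with honey",
    is_done=lambda state: state.get("diary_items", 0) >= 2,
)

print(breakfast.is_done({"diary_items": 2}))
```

Only the goal and the success check need maintaining here; the steps are the agent's problem.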

Three Experimental Approaches I've Tried

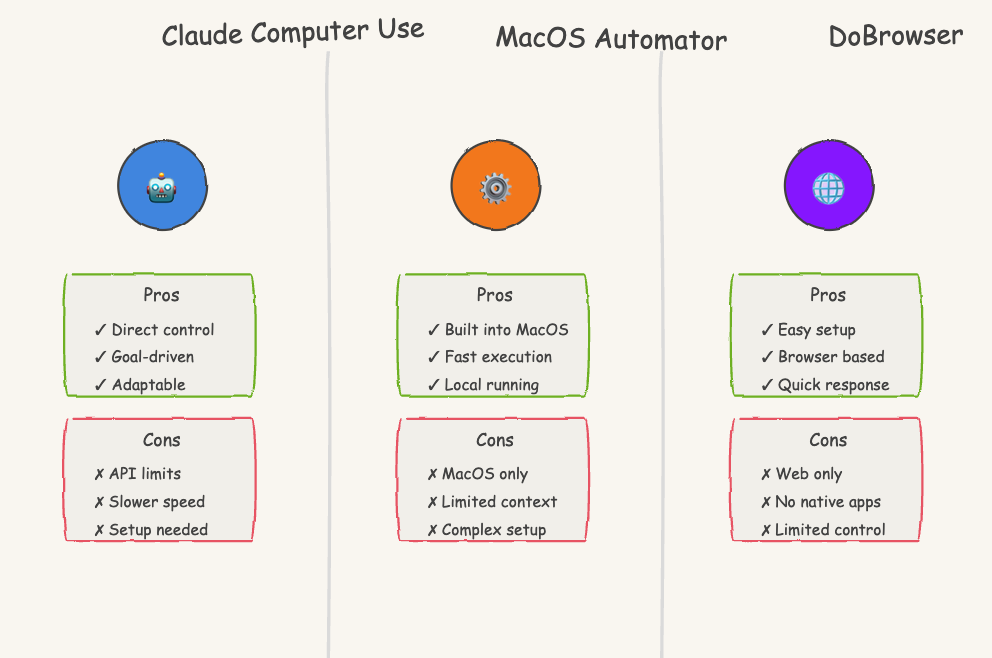

Here's a quick overview of each experiment. Excuse the naff diagram!

1. Claude: The Most Promising Approach

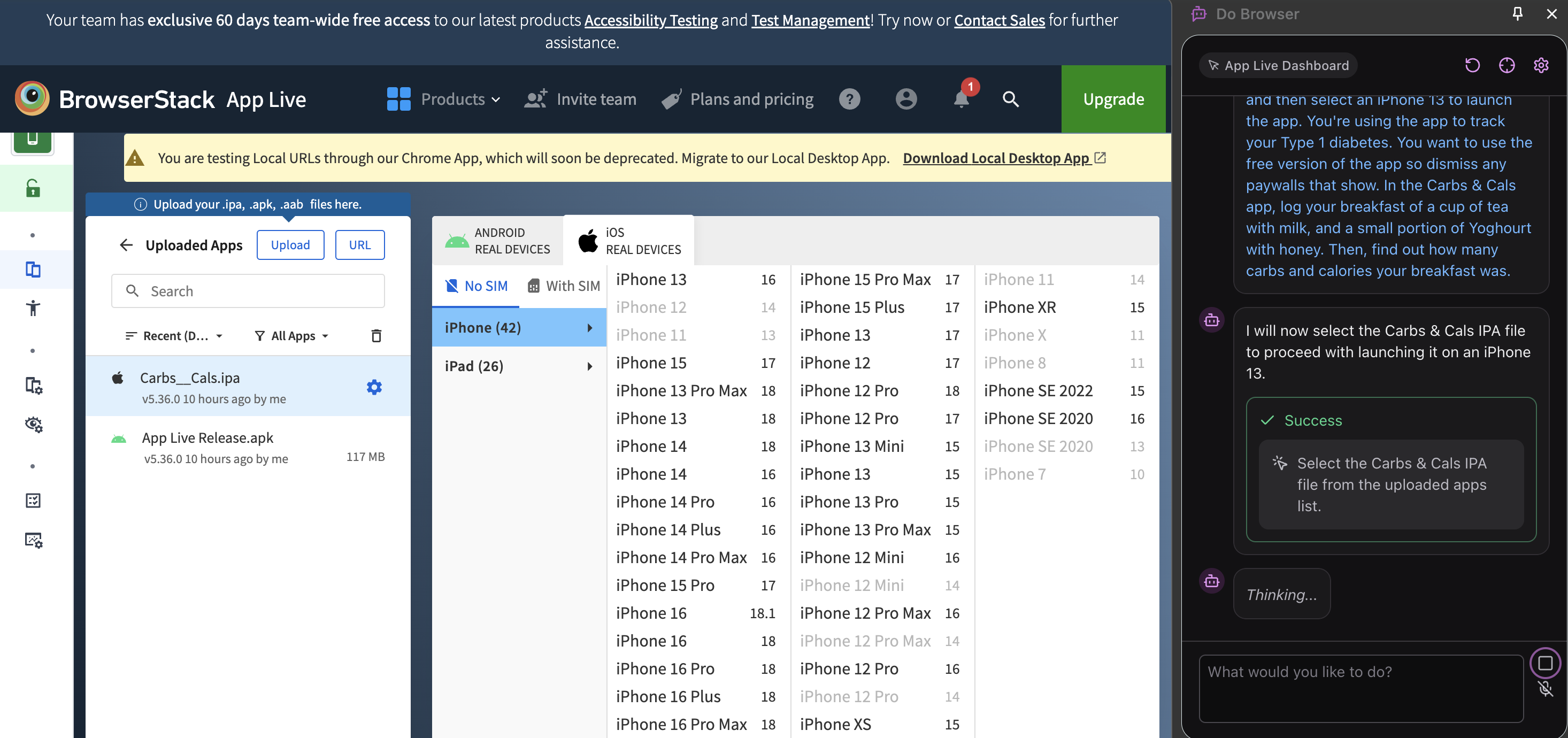

My first and most promising experiment used Claude and its new "computer use" feature. Setting it up was surprisingly straightforward:

- Downloaded and set up Docker

- Connected it with BrowserStack for app live testing

- Configured Claude for computer use

Here's the actual prompt I used:

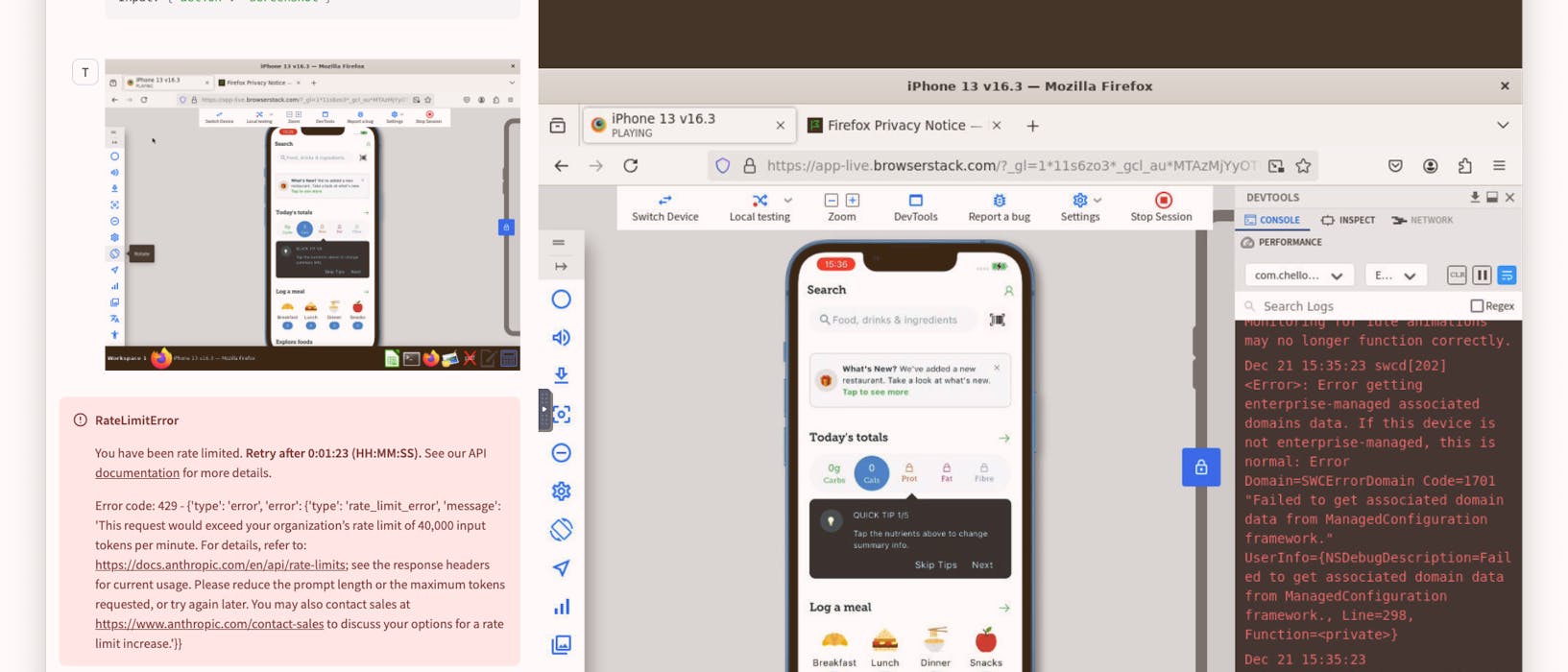

Act as a mobile tester.

Use the open firefox window to use the iPhone device in the BrowserStack window (take a fresh screenshot to see that)

You want to use the free version of the app so dismiss the paywall.

In the Carbs & Cals app, log your breakfast of a cup of tea with milk, and a small portion of yoghurt with honey.

Then, find out how many carbs and calories your breakfast was.

Wait 5 seconds between screenshots so you don't exceed the 40,000 token Claude rate limit.
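The five-second pause in that last line relies on the model doing as it's told. An alternative is to enforce the gap in whatever code requests the screenshots. Here's a minimal Python sketch of that idea; `MinIntervalThrottle` is a hypothetical helper, and the five-second figure is simply the one from the prompt.

```python
import time


class MinIntervalThrottle:
    """Block until at least `min_interval_s` has passed since the last call."""

    def __init__(self, min_interval_s, clock=time.monotonic, sleep=time.sleep):
        self._min_interval_s = min_interval_s
        self._clock = clock    # injectable so the class can be tested without waiting
        self._sleep = sleep
        self._last = None      # time of the previous call, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self._min_interval_s - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


throttle = MinIntervalThrottle(5.0)
throttle.wait()  # first call returns immediately
# ... take a screenshot and send it to the model here ...
```

Calling `throttle.wait()` before each screenshot keeps requests at least five seconds apart, whatever the model decides to do.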

Here's what happened:

As the video shows, the results were pretty cool.

Claude navigated through the onboarding flow, skipped the paywall (after I added that to the prompt), and started adding breakfast items. While it ultimately got stuck trying to add items to the diary, watching it figure things out was impressive. The main challenges were:

- API rate limits (I'll need to upgrade my tier)

- Some UI elements being blocked by BrowserStack's interface

- Occasional misunderstandings of app features

2. macOS Automator: A Creative Alternative

My second experiment involved macOS Automator combined with ChatGPT. The basic workflow was:

- Create a script that takes a testing goal

- Launch the app in the simulator

- Send screenshots to ChatGPT with the goal

- Convert ChatGPT's instructions into automated actions

While I got it working for basic tasks like finding buttons and filling search boxes, it struggled with maintaining context between actions. Still, with some more development time, this approach could be viable.
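The workflow above, plus one way of carrying context between actions, can be sketched in Python. This is not the Automator script itself. It assumes the model can be asked to reply with a single JSON object such as `{"action": "tap", "x": 10, "y": 20}`, and `take_screenshot`, `ask_model` and `perform` are hypothetical callables you would supply.

```python
import json

ALLOWED_ACTIONS = {"tap", "type", "done"}


def parse_action(reply: str) -> dict:
    """Parse a model reply that is assumed to be a single JSON object."""
    action = json.loads(reply)
    if not isinstance(action, dict) or action.get("action") not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {reply!r}")
    return action


def run_goal(goal, take_screenshot, ask_model, perform, max_steps=10):
    """Loop: screenshot -> model -> action, passing earlier actions as context."""
    history = []
    for _ in range(max_steps):
        screenshot = take_screenshot()
        # Sending a copy of the history each time gives the model its own
        # previous actions, which is one way to keep context between steps.
        reply = ask_model(goal, screenshot, list(history))
        action = parse_action(reply)
        if action["action"] == "done":
            return history
        perform(action)
        history.append(action)
    raise RuntimeError("goal not reached within max_steps")
```

Rejecting anything outside `ALLOWED_ACTIONS` means a confused reply stops the run instead of triggering a random click.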

3. DoBrowser: A Quick Test

My final experiment used DoBrowser, a Chrome extension for automating browser tasks. While it seemed promising for browser-based testing, sadly it couldn't interact with the device screen in BrowserStack.

However, its speed was impressive - if only it could be adapted for mobile testing. At least the experiment was cheap - I only stumped up £25 to give the Chrome extension a whirl!

The Business Case for AI Testing

The financial impact of AI testing is compelling. Recent studies reported by Forbes show companies implementing AI-driven testing are seeing ROI improvements of 200-300% over traditional methods in just the first year. This matches what we're seeing in our experiments:

- Reduction in test-case maintenance costs by 30-50%

- Cut in automation costs by 30-60%

- Reduction in time to market by up to 50%

These improvements come from reducing the need for specialized automation engineers (currently commanding £50K-£150K salaries in the UK) and dramatically cutting down the time spent maintaining test cases.