Our Director of User Experience, Anna Scandella, recently spoke at the GreenTech Gathering in Leeds about the drawbacks of using AI to make User Experience decisions. The following article is a summary of her talk.

Artificial intelligence is transforming the User Experience (UX) landscape and, as researchers, it is important that we learn to work with new technologies. Whilst AI tools can be incredibly helpful with certain tasks, understanding their limitations is key.

To ensure we are designing the right products and services that effectively meet the needs of all audiences, we have to gather robust data that captures a wide range of opinions and experiences.

UX is complex because people are complex. Real users interact unpredictably with digital tools. The challenge is not just understanding what users do, but why they do it. Despite its sophistication, AI struggles with this critical aspect.

Why Using AI in UX Research Is a Problem

Shortcutting the research process by using AI is a real temptation, especially when you consider the top three reasons that research gets overlooked in any project:

- User research is too expensive

- We don’t have time for user research, we need to launch quickly

- We know our users well enough already

AI can feel like the perfect solution: an infallible tool that will speed up decision-making and help you jump into developing the next shiny feature. However, this is a dangerous approach that is likely to result in you prioritising the wrong things and wasting valuable time and money.

When teams use guesswork, 80% of software goes to waste.

The main reason to conduct user research is to be surprised. You don’t want to recruit “average humans”.

The Assumption vs The Reality

Take the example of one of our clients, Carbs & Cals, a diabetes management app.

As part of our ongoing product and roadmap development, we’ve recently been exploring new features that will encourage users to log and track their food intake.

We wanted to test the difference between AI-generated predictions and researching with actual users. To do this, we built and trained a custom GPT on our key target audience profile.

We asked the AI model to predict what percentage of users would actively engage with logging the food they have consumed. The model predicted that 29% would, whereas we know from our product analytics that only 18% of this core audience do.

This gap was significant enough to reveal how AI models can often misread behavioural intent.
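To put the size of that gap in context, here is a minimal sketch of the arithmetic, using only the two figures quoted above. The variable names are our own for illustration; this is not part of the original analysis.

```python
# Figures quoted in the article; variable names are illustrative assumptions.
predicted_rate = 0.29  # share the custom GPT predicted would log food
observed_rate = 0.18   # share seen in product analytics

# Difference between prediction and reality, in percentage points.
absolute_gap_points = (predicted_rate - observed_rate) * 100

# How far the prediction overshoots, relative to the observed rate.
relative_overestimate = (predicted_rate - observed_rate) / observed_rate

print(f"Absolute gap: {absolute_gap_points:.0f} percentage points")
print(f"Relative overestimate: {relative_overestimate:.0%}")
```

Viewed this way, the model was 11 percentage points out, which means it overestimated engagement by roughly 61% relative to what users actually do.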

Why AI Falls Short in UX Research

AI is highly effective at recognising patterns in large datasets, making it invaluable for tasks such as processing medical records or predicting disease progression. However, its limitations become more apparent when it comes to human behaviour.

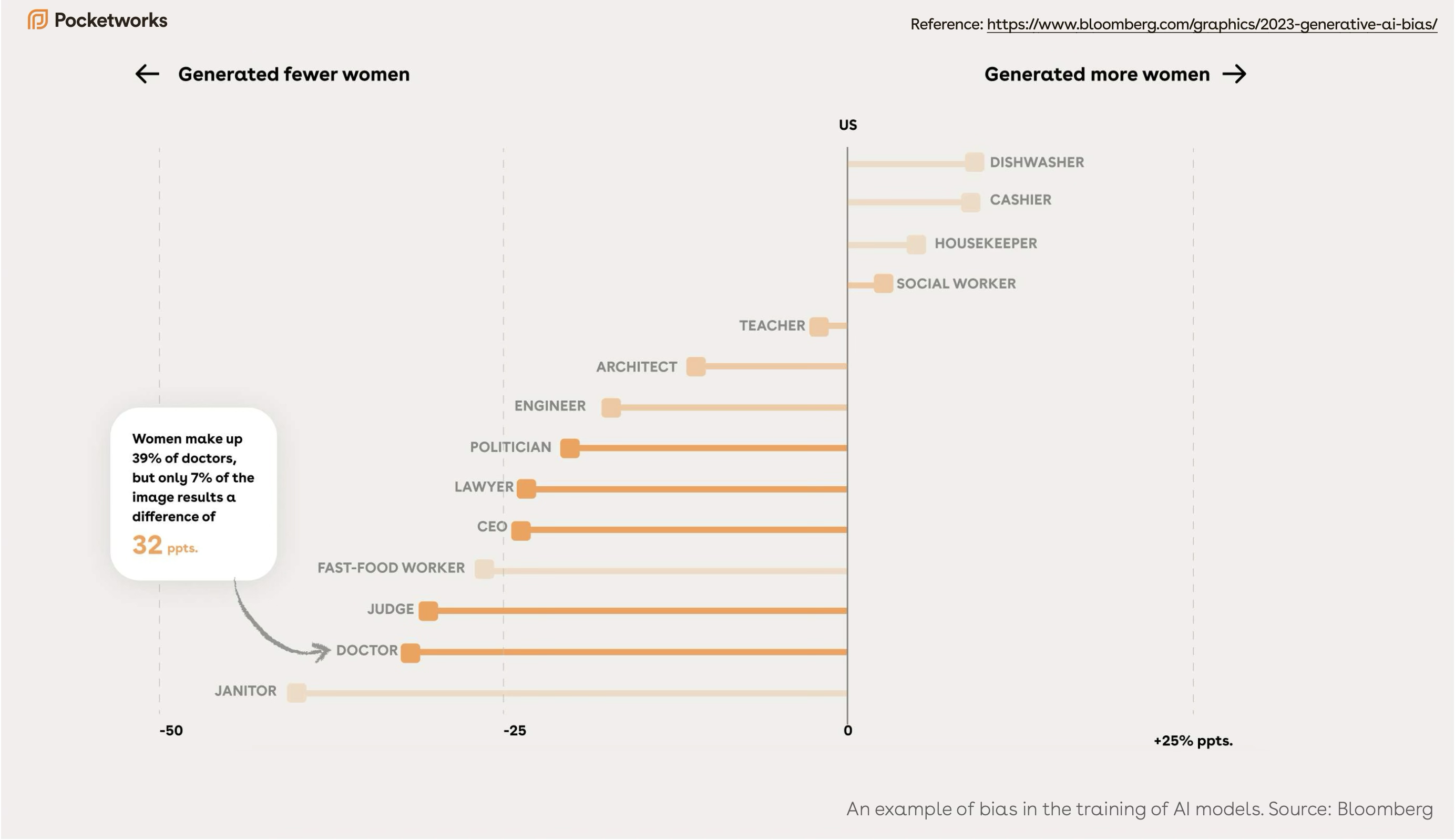

One of the core issues is the training data. Many AI models are built using historical datasets that fail to represent diverse populations. For example, women have been significantly underrepresented in clinical trials for decades. Research shows that only 33% of participants in cardiovascular trials are female, despite heart disease being a leading cause of death among women.

When AI models misinterpret user behaviour, they reinforce biases and drive flawed product decisions that could have serious consequences for patient care.

Gartner predicts that by 2026, AI models from organisations that operationalise AI transparency, trust, and security will achieve a 50% improvement in terms of adoption, business goals, and user acceptance.

AI’s Role in User Research

This doesn’t mean AI has no place in UX research. It can be a valuable tool for drafting surveys, identifying common themes, and analysing large datasets. It speeds up the research process and can highlight patterns that might otherwise go unnoticed. However, AI should never replace direct user research.

In healthcare, user behaviour is often influenced by deeply personal factors that AI cannot predict. A person may avoid logging food because of guilt or anxiety around eating habits, not because they dislike the idea. AI is unable to interpret these emotions accurately.

AI often struggles with sentiment analysis, frequently misreading sarcasm. A user might say, “This app is great at suggesting the wrong foods”, and an AI might register that as positive feedback (this actually happened to us when evaluating the sentiment of an app review). A human researcher would instantly recognise the sarcasm.
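To illustrate one way this kind of misreading can happen, here is a deliberately simplified sketch of a word-counting sentiment scorer. It is not the tool we used to evaluate the review, and real sentiment models are far more sophisticated; the word weights and the function name `toy_sentiment` are hypothetical, chosen only to show the mechanism.

```python
import re

# Hypothetical word weights for illustration only; not a real sentiment lexicon.
TOY_LEXICON = {
    "great": 3, "love": 3, "good": 2,
    "wrong": -2, "bad": -2, "terrible": -3,
}

def toy_sentiment(text):
    """Sum the weights of known words and label the overall score."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(TOY_LEXICON.get(word, 0) for word in words)
    if score > 0:
        label = "positive"
    elif score < 0:
        label = "negative"
    else:
        label = "neutral"
    return score, label

review = "This app is great at suggesting the wrong foods"
print(toy_sentiment(review))  # (1, 'positive')
```

Even though this toy scorer treats "wrong" as a negative word, "great" outweighs it and the review is labelled positive. Scoring words in isolation says nothing about how they relate to each other, which is exactly where sarcasm lives.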